by Stuart Russell and Daniel Susskind*

Current trends in AI are nothing if not remarkable. Day after day, we hear stories about systems and machines taking on tasks that, until very recently, we saw as the exclusive and permanent preserve of humankind: making medical diagnoses, drafting legal documents, designing buildings, and even composing music.

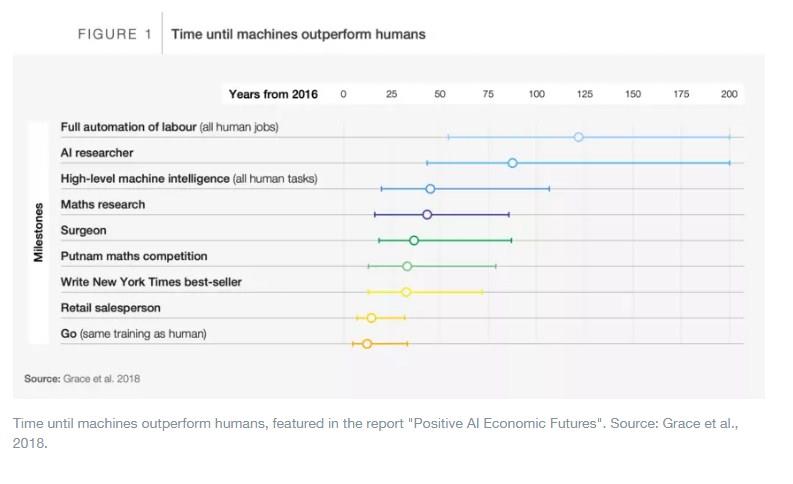

Our concern here, though, is with something even more striking: the prospect of high-level machine intelligence systems that outperform human beings at essentially every task. This is not science fiction. In a recent survey of leading computer scientists, the median estimate put the chance that this technology would arrive within 45 years at 50%.

Importantly, that survey also revealed considerable disagreement. Some see high-level machine intelligence arriving much more quickly, others far more slowly, if at all. Such differences of opinion abound in the recent literature on the future of AI, from popular commentary to more expert analysis.

Yet despite these conflicting views, one thing is clear: if we think this kind of outcome might be possible, then it ought to demand our attention. Continued progress in these technologies could have extraordinarily disruptive effects – it could exacerbate recent trends in inequality, undermine work as a force for social integration, and weaken a source of purpose and fulfilment for many people.

Experts gather to share their AI visions

In April 2020, an ambitious initiative called Positive AI Economic Futures was launched by Stuart Russell and Charles-Edouard Bouee, both members of the World Economic Forum’s Global AI Council (GAIC). In a series of workshops and interviews, over 150 experts from a wide variety of backgrounds gathered virtually to discuss these challenges, as well as possible positive visions for AI and their implications for policymakers.

Those included Madeline Ashby (science fiction author and expert in strategic foresight), Ken Liu (Hugo Award-winning science fiction and fantasy author), and economists Daron Acemoglu (MIT) and Anna Salomons (Utrecht), among many others. What follows is a summary of these conversations, developed in the Forum’s report Positive AI Economic Futures.

What will constitute ‘work’ in the future?

Participants were divided on this question. One camp thought that, freed from the shackles of traditional work, humans could use their new freedom to engage in exploration, self-improvement, volunteering, or whatever else they find satisfying. Proponents of this view usually supported some form of universal basic income (UBI), while acknowledging that our current system of education hardly prepares people to fashion their own lives, free of any economic constraints.

The second camp in our workshops and interviews believed the opposite: traditional work might still be essential. To them, UBI is an admission of failure – it assumes that most people will have nothing of economic value to contribute to society. Such people can be fed, housed, and entertained – mostly by machines – but otherwise left to their own devices.

In this camp’s view, people will instead be engaged in supplying interpersonal services that can be provided – or which we prefer to be provided – only by humans. These include therapy, tutoring, life coaching, and community-building. That is, even if we can no longer supply routine physical labour and routine mental labour, we can still supply our humanity. For these kinds of jobs to generate real value, we will need to be much better at being human – an area where our education system and scientific research base are notoriously weak.

So, whether we think that the end of traditional work would be a good thing or a bad thing, it seems that we need a radical redirection of education and science to equip individuals to live fulfilling lives or to support an economy based largely on high-value-added interpersonal services. We also need to ensure that the economic gains born of AI-enabled automation will be fairly distributed in society.

Six AI scenarios that could build a positive future

One of the greatest obstacles to action is that, at present, there is no consensus on what future we should target, perhaps because there is hardly any conversation about what might be desirable. This lack of vision is a problem because, if high-level machine intelligence does arrive, we could quickly find ourselves overwhelmed by unprecedented technological change and implacable economic forces. This would be a vast opportunity squandered.

For this reason, the workshop attendees and interview participants, from science-fiction writers to economists and AI experts, attempted to articulate positive visions of a future where Artificial Intelligence can do most of what we currently call work.

These scenarios represent possible trajectories for humanity. None of them, though, is unambiguously achievable or desirable. And while the visions share important points of agreement, they also reveal some telling clashes.

1. Shared economic prosperity

The economic benefits of technological progress are widely shared around the world. The global economy is 10 times larger because AI has massively boosted productivity. Humans can do more and achieve more by sharing this prosperity. This vision could be pursued by adopting various interventions, from introducing a global tax regime to improving insurance against unemployment.

2. Realigned companies

Large companies focus on developing AI that benefits humanity, and they do so without holding excessive economic or political power. This could be pursued by changing corporate ownership structures and updating antitrust policies.

3. Flexible labour markets

Human creativity and hands-on support give people time to find new roles. People adapt to technological change and find work in newly created professions. Policies would focus on improving educational and retraining opportunities, as well as strengthening social safety nets for those who would otherwise be worse off due to automation.

4. Human-centric AI

Society decides against excessive automation. Business leaders, computer scientists, and policymakers choose to develop technologies that increase rather than decrease the demand for workers. Incentives to develop human-centric AI would be strengthened and automation taxed where necessary.

5. Fulfilling jobs

New jobs are more fulfilling than those that came before. Machines handle unsafe and boring tasks, while humans move into more productive, fulfilling, and flexible jobs with greater human interaction. Policies to achieve this include strengthening labour unions and increasing worker involvement on corporate boards.

6. Civic empowerment and human flourishing

In a world with less need to work and basic needs met by UBI, well-being increasingly comes from meaningful unpaid activities. People can engage in exploration, self-improvement, volunteering or whatever else they find satisfying. Greater social engagement would be supported.

The intention is that this report starts a broader discussion about what sort of future we want and the challenges that will have to be confronted to achieve it. If technological progress continues its relentless advance, the world will look very different for our children and grandchildren. Far more debate, research, and policy engagement are needed on these questions – they are now too important for us to ignore.

*Stuart Russell is Professor of Computer Science and Director of the Center for Human-Compatible AI, University of California, Berkeley; Daniel Susskind is a Fellow in Economics, Oxford University, and Visiting Professor, King’s College London.

**first published in: www.weforum.org

By: N. Peter Kramer