by Pascale Fung and Hubert Etienne*

As a driving force in the Fourth Industrial Revolution, AI systems are increasingly being deployed in many areas of our lives around the world. A shared realisation about their societal implications has raised awareness of the need to develop an international framework for the governance of AI, and more than 160 documents aim to contribute by proposing ethical principles and guidelines. This effort faces the challenge of moral pluralism grounded in cultural diversity between nations.

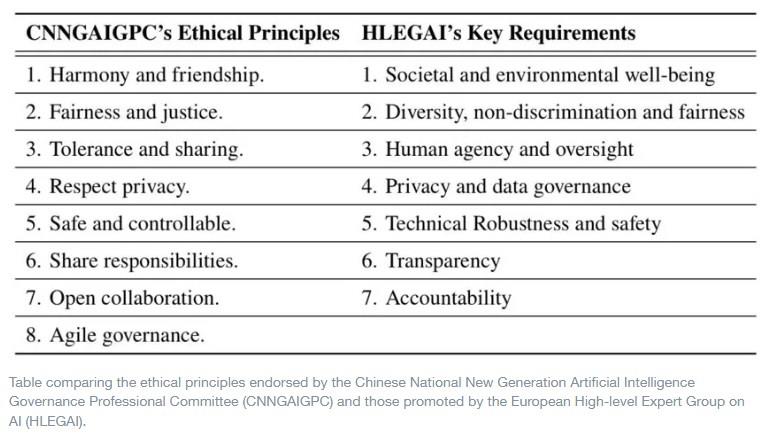

To better understand the philosophical roots and cultural context underlying these challenges, we compared the ethical principles endorsed by the Chinese National New Generation Artificial Intelligence Governance Professional Committee (CNNGAIGPC) and those promoted by the European High-level Expert Group on AI (HLEGAI).

Collective vs individualistic view of cultural heritage

In many respects the Chinese principles seem similar to the EU’s, with both promoting fairness, robustness, privacy, safety and transparency. Their prescribed methodologies, however, reveal clear cultural differences.

The Chinese guidelines derive from a community-focused and goal-oriented perspective. “A high sense of social responsibility and self-discipline” is expected from individuals so that they partake harmoniously in a community promoting tolerance, shared responsibilities and open collaboration. This emphasis is clearly informed by the Confucian value of “harmony”, as an ideal balance to be achieved through the control of extreme passions – conflicts should be avoided. Other than a stern admonition against “illegal use of personal data”, there is little room for regulation. Regulation is not the aim of these principles, which are rather conceived to guide AI developers in the “right way” for the collective elevation of society.

The European principles, emerging from a more individual-focused and rights-based approach, express a different ambition, rooted in the Enlightenment and coloured by European history. Their primary goal is to protect individuals against well-identified harms. Whereas the Chinese principles emphasize the promotion of good practices, the EU focuses on the prevention of malign consequences. The former set a direction for the development of AI, so that it contributes to the improvement of society; the latter sets limits on its uses, so that this development does not happen at the expense of certain people.

This distinction is clearly illustrated by the presentation of fairness, diversity and inclusiveness. While the EU emphasizes fairness and diversity with regard to individuals from specific demographic groups (specifying gender, ethnicity, disability, etc.), the Chinese guidelines urge the upgrading of “all industries”, the reduction of “regional disparities” and the prevention of data monopoly. While the EU insists on the protection of vulnerable persons and potential victims, the Chinese guidelines encourage “inclusive development through better education and training, support”.

In the promotion of these values, we also recognize two types of moral imperatives. Centred on initial conditions to fulfil, the European requirements express a strict adherence to deontological rules in the pure Kantian tradition. In contrast, because they refer to an ideal to aim for, the Chinese principles express softer constraints that could be satisfied at different levels, as part of a process to improve society. For the Europeans the development of AI “must be fair”; for the Chinese it should “eliminate prejudices and discriminations as much as possible”. The EU “requires processes to be transparent”; China requires them to “continuously improve” transparency.

Utopian vs dystopian view

Even when promoting the same concepts in a similar way, Europeans and Chinese mean different things by “privacy” and “safety”.

Aligned with the General Data Protection Regulation (GDPR), the European promotion of privacy encompasses the protection of individuals’ data from both state and commercial entities. The Chinese privacy guidelines, in contrast, only target private companies and their potential malicious agents. Whereas personal data is strictly protected from commercial entities both in the EU and in China, the state retains full access in China. Shocking to Europeans, this practice is readily accepted by Chinese citizens, who are accustomed to living in a protected society and have consistently shown the highest trust in their government. Chinese parents routinely have access to their children’s personal information to provide guidance and protection. This difference goes back to the Confucian tradition of trusting and respecting the heads of state and family.

With regard to safety, the Chinese guidelines express an optimism which contrasts with the EU’s more pessimistic tone. They approach safety as something that needs to be “improved continuously”, whereas the European vision calls for a “fall back plan”, treating the loss of control as a situation with no way back. The gap in the cultural representation of AI, perceived as a force for good in Asian cultures and viewed with a deep-seated wariness of a dystopian technological future in the Western world, helps make sense of this difference. Robots are pets and companions in the utopian Chinese vision, whereas they tend to become insurrectional machines as portrayed by Western media heavily influenced by the cyberpunk subgenre of sci-fi, embodied by films like Blade Runner and The Matrix, and the TV series Black Mirror.

Where is the common ground on AI ethics?

Despite the seemingly different, though not contradictory, approaches to AI ethics taken by China and the EU, the presence of major commonalities between them points to a more promising and collaborative future in the implementation of these standards.

One of these is the shared tradition of the Enlightenment and the Scientific Revolution in which all members of the AI research community are trained today. AI research and development is an open and collaborative process across the globe. The scientific method was first adopted by China, among other Enlightenment values, during the May Fourth Movement in 1919. Characterised as the “Chinese Enlightenment”, this movement resulted in the first-ever repudiation of traditional Confucian values, and it was then believed that only by adopting Western ideas of “Mr. Science” and “Mr. Democracy” in place of “Mr. Confucius” could the nation be strengthened.

This anti-Confucian movement took place again during the Cultural Revolution which, given its disastrous outcome, is now discredited. In the years since the third generation of Chinese leaders, the Confucian value of the “harmonious society” has again been promoted as a cultural identity of the Chinese nation. Nevertheless, “Mr. Science” and “technological development” continue to be seen as major engines of economic growth, leading to the betterment of the “harmonious society”.

Another common point between China and Europe relates to their adherence to the United Nations Sustainable Development Goals. Both sets of guidelines refer to some of these goals, including poverty and inequality reduction, gender equality, health and well-being, environmental sustainability, peace and justice, and economic growth, which encompass both societal and individual development and rights.

The Chinese and the EU guidelines on ethical AI may ultimately benefit from being adopted together. They provide different levels of operational detail and bring complementary perspectives to a comprehensive framework for the governance of AI.

*Director of the Centre for Artificial Intelligence Research (CAiRE) and Professor of Electrical & Computer Engineering, The Hong Kong University of Science and Technology; and Ph.D. candidate in AI ethics, Ecole Normale Superieure, Paris

**first published in: www.weforum.org

By: N. Peter Kramer
