by Sara Stratton and Beatrice Dias*

Although our future remains unwritten, each day we shape its foundation through our collective efforts, just as our past has paved the way to our present moment. Current debates on artificial intelligence (AI) and AI ethics focus primarily on the impact AI is having on today’s populations – the fairness of using AI in recruitment, finance, and education, for example. But what about the equally important impacts of today’s AI systems on future generations? Policymakers and regulators should pay attention to these intergenerational issues.

Right now, a very narrow demographic of the world’s population holds decision-making power in the technology sector and the decisions they make are shaping our collective futures. Furthermore, people outside the tech industry are often seen purely as consumers and are left out of conversations about the technologies that impact their lives and livelihoods. This exacerbates imbalances in power and furthers exploitative conditions and injustices in our world. Without change at the decision-making level, therefore, there is a danger that AI will harm generations to come.

How AI perpetuates existing inequities

Consider, for example, the case of automated exam proctoring systems used to oversee examinations, confirming test-takers’ identities and ensuring the integrity of the exam environment. These applications have been particularly useful during the COVID-19 pandemic as many educational institutions switched to virtual platforms. Emerging findings, however, demonstrate the harms of these technologies. These findings build on more than a decade of research by women and people of color who have been studying the inequitable impacts of everyday technologies.

Historically, US research shows significant racial bias in disciplinary actions taken in schools, where “black students are more likely to be seen as problematic and more likely to be punished than white students are for the same offence”, according to Princeton University’s Travis Riddle and Stacey Sinclair. It is tempting to assume that an automated, technical implementation of behavioural monitoring in schools would be free of these discriminatory actions, but research shows otherwise. As MIT researcher Joy Buolamwini notes: “AI is based on data, and data is a reflection of our history.” Her work, featured in the documentary Coded Bias, highlights “how machine-learning algorithms...can perpetuate society’s existing race-, class- and gender-based inequities,” according to an NYT review.

Underlying these types of tools and procedures is the normative social infrastructure formed decades ago, in which students are viewed as subjects to be monitored for infractions. These are ideas we need to scrutinise before we replicate such notions in emerging technological products. As Shea Swauger, an academic librarian and researcher at the University of Colorado Denver, points out: “Technology didn’t invent the conditions for cheating and it won’t be what stops it. The best thing we in higher education can do is to start with the radical idea of trusting students. Let’s choose compassion over surveillance.”

Mitigating harm now and in the future

When systems are digitally coded, they become a permanent digital record of judgement that can be tied to a person for life. “We have reached a tipping point where it will now be difficult to dislodge the normalisation of digital surveillance. Further, its effects may be long-lasting and multigenerational,” says Dr. Safiya Noble, an Associate Professor of Gender Studies and African American Studies at the University of California, Los Angeles (UCLA). So, how do we put in place structures that can mitigate existing and future harms from AI systems currently in place?

Regulation can play a crucial role in these efforts. Present-day economic gain for shareholders and investors has been the main value at the core of many of the technology innovations that have been growing rapidly over the past five decades. We need to think longer-term about the future of AI as it is implemented across a variety of industries over multiple generations. This is a crucial moment to innovate our approach to regulation by addressing intergenerational implications.

Typically, regulation is focused on trade-offs in the short or medium term. This approach often results in reactive rather than proactive measures to mitigate harms across generations. Viewing AI regulation from an intergenerational perspective requires us to first contend with the historical context of such technological systems. How have dominant ideologies and socio-political structures shaped these tools?

Within this framework of understanding, we can begin to unpack how AI and its associated physical and digital infrastructure might impact our current and future generations. Anticipating potential and overarching impacts of AI, from an intergenerational view, will move us closer to regulation that is responsive to emergent data and research, multi-stakeholder concerns, and global possibilities.

Current trends in AI regulation provide a promising path forward. In 2019, Aotearoa New Zealand worked in partnership with the World Economic Forum to develop a Reimagining Regulation for AI process for the government, to ensure the trustworthy design and deployment of AI.

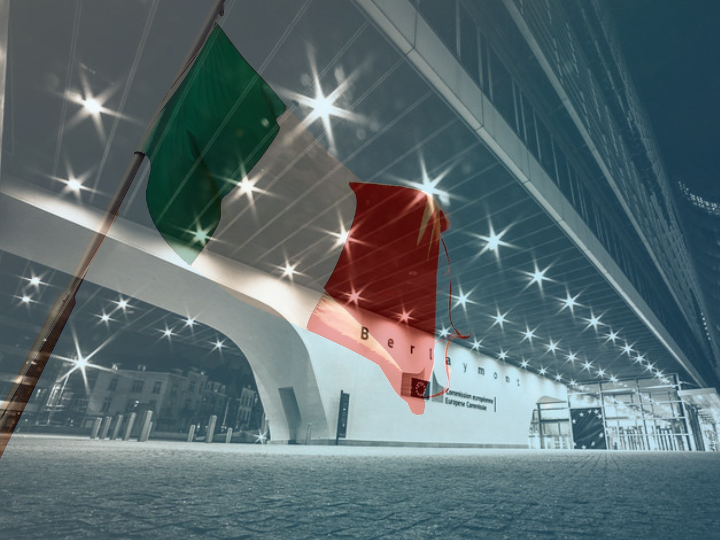

More recently, the US Federal Trade Commission released a bold set of guidelines on “truth, fairness, and equity” in AI in 2021. The European Commission also published a proposal for the regulation of AI earlier this year. These measures offer valuable approaches to improving transparency, accountability and impact assessments associated with AI systems. We can build on such procedures by incorporating more intergenerational, interdisciplinary and international voices in the conversation about impacts of AI across generations.

Regulation created through this lens could mitigate current harms and even prevent future damage. Indigenous wisdom teaches us to look at decision-making as a 500-year responsibility that foregrounds the well-being of people and planet, past, present and future. We must heed these lessons if we want to build a healthy and thriving future, free from the short-term interests of today, for generations to come.

*Founder, Maori Lab and Assistant Professor, University of Pittsburgh

**first published in: www.weforum.org

By: N. Peter Kramer
