by Carlos Hernandez-Echevarria*

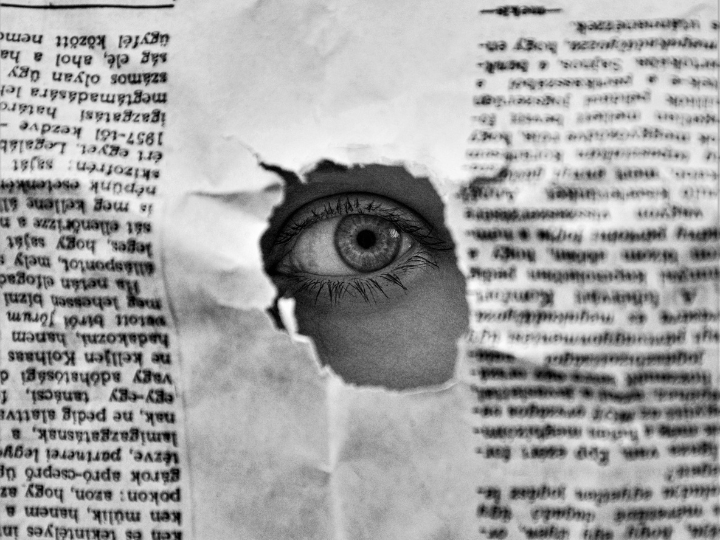

Regulation alone will not solve the huge disinformation problem Europe faces, but the incoming Digital Services Act can certainly help, writes Carlos Hernandez-Echevarria.

As the EU reflects on how to set ground rules for big Internet companies, it needs to consider how to encourage those platforms to contribute to a more fact-based public discourse. That is one of our biggest challenges as a society: the quality of the information citizens rely on when they make decisions about our common future.

According to the Eurobarometer survey conducted in December 2019, more than 70% of European citizens over the age of 15 said that they came across false information several times a month or even more often.

If that is not alarming enough, all evidence indicates that the pandemic, which started shortly afterwards, has had a multiplying effect on disinformation: a recent study from the Reuters Institute found that the number of English-language fact-checks rose by 900% between January and March 2020 alone.

As the same study states, “the most common claims within pieces of misinformation concern the actions or policies that public authorities are taking to address COVID-19”, eroding confidence in public health measures and jeopardizing their effectiveness.

Before the pandemic, a majority of European citizens already declared that public authorities should be responsible for combatting mis- and disinformation, but any legislative action in that regard involves serious risks that have to be considered.

If any regulation gives even the remote appearance of a government trying to hinder freedom of speech by declaring what is true and what is fake, it could do more harm than good. It would bolster precisely the kind of populist, non-fact-based arguments it intends to counter.

If the EU institutions want the Digital Services Act to be an effective tool against disinformation, the law ought to ensure transparency and collaboration among the main actors in this conversation: governments, academia, civil society, journalism and fact-checkers, and first and foremost, the platforms.

Effectively regulating the Internet will never be an easy task. It is a fluid, ever-changing environment where the letter of the law can become obsolete very quickly. That is why it is all the more important to work with the platforms to improve their curation and moderation processes.

As fact-checkers, we see every day the outsized role that platforms such as Google, Facebook, YouTube and Twitter play in shaping public opinion. They continuously make judgements on content that should no longer be the result of a purely corporate, case-by-case, opaque decision-making process.

When they delete a post or attach an explanatory note to a piece of content (whether it is a tweet, a Facebook post or a YouTube video), they are exercising a huge responsibility, all the more so if they do it without taking independent verification processes into account.

When they evaluate whether content is misleading, dangerous or plainly illegal, they are in fact making a decision that affects the fundamental right to freedom of speech. At the very least, they should be compelled by law to publicly explain how they make those decisions: Which criteria are used? How are they measured? Are those decisions made by an algorithm, or is human moderation applied?

We need transparency from those companies to learn about those processes and, ultimately, to make sure society has a say in redefining them so they have more credibility and therefore more effectiveness against disinformation.

If platforms involve academics, journalists, fact-checkers and public officials in designing and overseeing moderation and curation practices, they will avoid the perception of a single entity dictating which speech is acceptable, and all users will know that decisions on content are based on data rather than perception.

As fact-checkers, we are already playing a prominent role in this fight for a more fact-driven civil discourse: in our daily work we address disinformation campaigns and provide fact-based, high-quality information for those platforms and the public to rely on. And it does work. Contrary to common misconceptions such as "people will believe what they want to believe", fact-checking has proved effective in stopping the spread of specific pieces of disinformation that would otherwise keep making the rounds.

There are, of course, hardcore partisans who refuse to acknowledge anything that challenges their views, but they should not be mistaken for society as a whole.

Fact-checkers are generally small organizations, and we could use some help from the platforms as well: they can contribute to our sustainability, but they can also make a difference to the effectiveness of our work. For instance, sharing quality data with fact-checkers would help immensely to understand how disinformation moves within their sites and how our work affects its dissemination.

Both the European Commission and the European Parliament have declared the fight against disinformation one of the pillars of the incoming Digital Services Act. The debate has so far focused on new obligations for platforms and, while regulation must make sure they listen to and support relevant actors such as fact-checkers, the institutions themselves can do more. This new legislation ought to foster media literacy initiatives that educate the public and make it less susceptible to disinformation. It should also set a higher bar for transparency in public institutions.

The incoming Digital Services Act will have profound implications for the health of public discourse in the European Union and for the quality of the information citizens will rely on when making decisions about our common future. Let us make sure the legislation addresses those goals effectively.

*head of public policy and institutional development at Maldita.es, a European nonprofit journalistic platform and the most visited independent fact-checking website in the European Union

**first published in: www.euractiv.com

By: N. Peter Kramer