by Samuel Stolton

EU law enforcement authorities should make ‘significant investments’ into developing new screening technologies that could help to detect the malicious use of deepfakes, a new report from the bloc’s police agency Europol recommends.

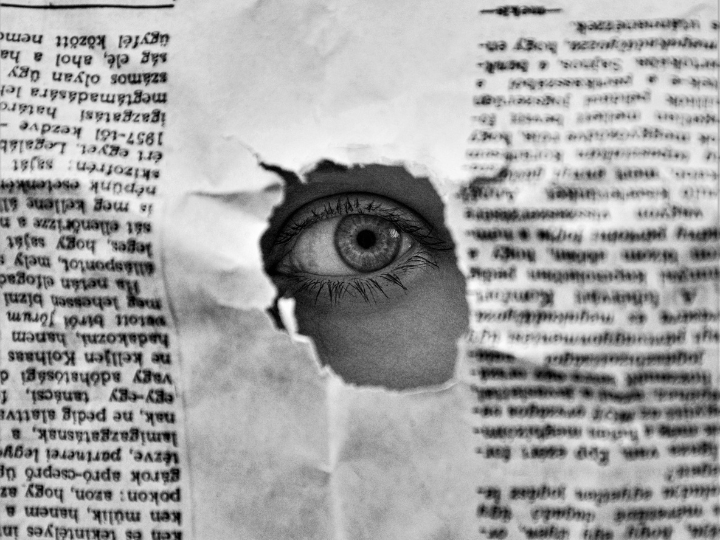

Deepfake technology involves the creation of synthetic media, generally video material, using artificial intelligence and machine learning tools which allow for an individual’s facial expressions and speech to be doctored to appear real.

High-level politicians have long borne the brunt of these technologies, with falsified videos being produced of German Chancellor Angela Merkel, former US President Barack Obama, and ex-Italian Prime Minister Matteo Renzi.

The purpose of politically motivated deepfake videos is to stoke social unrest and political polarisation among online users by delivering falsified messages from well-known leaders. Europol expects the methods of those producing them to become more technologically advanced in the future.

“The individuals and groups behind the abuse of deepfakes are expected to adapt their modus operandi with the aim of evading detection and training their models to follow counter-detection measures,” states Europol’s report, published on Thursday (19 November).

“Deepfakes can, in this regard, become a significant challenge to the current forensic audio-visual analysis and authentication techniques employed by industries, competent authorities, media professionals, and civil society,” the document, which examines the threat landscape for artificial intelligence technologies, adds.

To address the future challenges posed by the development of more sophisticated deepfake tools, the report, produced alongside the United Nations Interregional Crime and Justice Research Institute (UNICRI) and Trend Micro, recommends that law enforcement agencies establish more advanced means of detecting next-generation doctored video and audio content.

“It is necessary not only to make significant investments to develop new screening technology to detect tampering and irregularities in visual and audio content, but also to ensure that such tools are kept up to date to reflect evolving deepfake creation technology, including current and possible future malicious abuse of such technology.”

“In order to optimise the efforts and address the current gaps, the development of systems to combat deepfakes should be done in a collaborative manner between industry and end-users from competent authorities.”

Digital Services Act to clamp down on deepfakes?

In Brussels, the European Commission is putting the final touches to its upcoming Digital Services Act, an ambitious regulatory framework that will introduce new rules in areas ranging from content moderation to online advertising and the transparency of algorithms. The new rules will be presented by the EU executive on 9 December.

A recent paper funded by the German Foreign Office and discussed in the EU Council’s Working Party for Hybrid Threats states that the Digital Services Act could include ‘opportunities for regulation’ against ‘deepfake threats.’

However, the Digital Services Act is unlikely to impose hard obligations on platforms to remove such content.

“In order to address disinformation and harmful content we should focus on how this content is distributed and shown to people rather than push for removal,” Vera Jourova, the European Commission Vice-President for Values and Transparency, said recently.

While the EU’s Digital Services Act could include rules to curb the virality of nefarious content such as deepfakes, the Commission is also likely to address such online material in the upcoming Democracy Action Plan, which will home in on fake news in the context of external interference and manipulation in elections.

Jourova said as part of an online video conference this week that the new measures, to be presented by the Commission on 2 December, will reinforce the application of the EU’s code of practice against disinformation, a voluntary framework that introduces a series of best practices for signatories, including Google, Facebook, and Twitter, to abide by.

The code was introduced by the Commission in October 2018, in a bid to combat fake news in the context of the May 2019 European Parliament elections.

The initiative came in for heavy criticism earlier this year, with a series of EU member states calling the self-regulatory framework “insufficient and unsuitable.”

*first published in: www.euractiv.com

By: N. Peter Kramer
