by Samuel Stolton

EU policymakers should put forward provisions as part of the forthcoming Digital Services Act that would permit platforms to screen uploads for both illegal and harmful content, a paper published by an international startup association has said.

Such “proactive measures” could help provide a certain level of “legal certainty” for tech firms, according to the paper, published on Tuesday (27 October) by Oxera consulting for the trade lobby group Allied for Startups.

The Digital Services Act (DSA) is the EU’s ambitious plan to regulate online services and will cover a range of areas of the platform economy, from content moderation to online advertising and the transparency of algorithms. The Commission is due to present the plans on 2 December.

According to the paper, which is supported by a contingent of global startup groups, the measures should include a voluntary regime under which platforms have the right to monitor content using algorithms, with a view to detecting both illegal and “harmful” content.

In particular, the use of automated tools to screen content could help reduce costs for startups, the document argues.

Despite encouraging proactive screening of content, however, the association says that the limited liability exemptions currently defined in the 2000 e-Commerce directive should be maintained.

Under the directive, platforms are exempt from legal liability for the content users post online until they are made aware of the content’s potential infringement, at which point they are required to act swiftly to deal with it.

Speculative modeling conducted by the authors of the report, Oxera consulting, shows that introducing more stringent liability rules for platforms in the Digital Services Act could lead to up to €23 billion in revenue lost for small businesses across Europe. This is due to the potential of increased fees being introduced by platforms as a result of such liability frameworks, the report states.

“Over 10,000 EU platforms serve markets and societies. Getting liability rules that work for these startups is in our broader economic interest and should be the central objective of the Digital Services Act,” said Benedikt Blomeyer, director of EU policy at Allied for Startups.

This is a point broadly supported by those in the industry who share the startup community’s penchant for introducing proactive measures into the Digital Services Act.

Yesterday, tech lobby EDiMA, representing some of the world’s largest platforms such as Google, Facebook and Amazon, released a paper supporting the introduction of a legal safeguard allowing companies to take “proactive actions” to remove illegal content.

The copyright nightmare returns

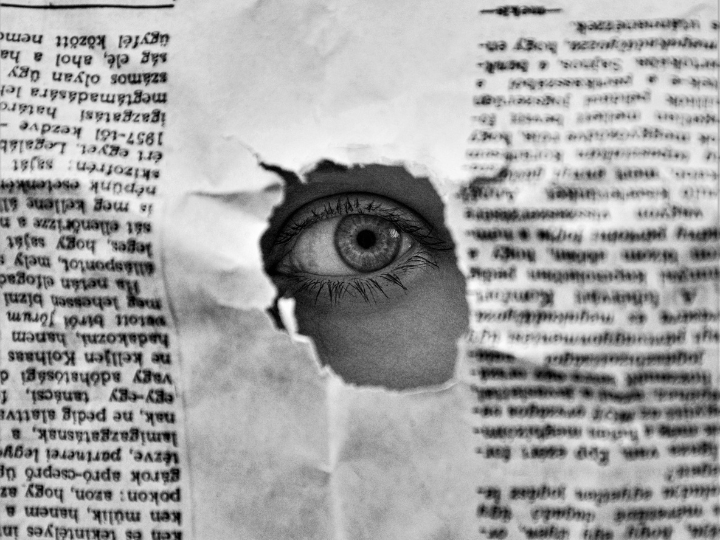

Jockeying over whether “proactive measures” should be introduced into the Digital Services Act echoes similar disputes on the subject during negotiations on the EU’s Copyright Directive. Whether to introduce “upload filters” proved a contentious issue in the directive, which was approved last year.

Article 17 of the directive obliges platforms to stop illegal uploads of copyright-protected material. However, the article left a lot of room for interpretation in terms of whether platforms would be required to use upload filters in the directive’s transposition, leading to a series of further consultations between the European Commission and representatives from national governments, civil society, and the industry.

Most recently, this has provoked concerns in Germany, where the country’s Justice Minister Christine Lambrecht put forward plans which include mandating the use of upload filters for platforms. This position runs counter to the stance the government took when it backed the new rules in 2019.

*first published in: www.euractiv.com

By: N. Peter Kramer
